Step-by-Step Guide to Creating an AI Game Bot with PyTorch and EfficientNet

Are you new to the world of Artificial Intelligence and looking for a fun project to get started? Look no further! This blog will guide you through the creation of an AI model that can play the popular Chrome Dino Game using PyTorch and EfficientNet.

Games have long served as a testbed for AI. DeepMind's deep Q-network, which learned to play Atari games directly from screen pixels, was one of the first demonstrations of deep reinforcement learning and helped pave the way for many subsequent advances in the field. OpenAI, the organization that developed ChatGPT, also used games such as Dota 2 as early benchmarks. So, building an AI model to play the Chrome Dino Game is part of a long tradition of using games to test and develop AI algorithms. Note that this tutorial takes a simpler route than reinforcement learning: it trains an image classifier on recordings of human play.

The Chrome Dino Game is a simple yet addictive game that has captured the hearts of millions of players worldwide. The objective of the game is to control a dinosaur and help it run as far as possible without hitting obstacles. With the help of AI, we can create a model that can learn how to play the game and beat our high scores.

This tutorial is for anyone who is interested in building an AI model that can play games. Even if you are new to the concept of AI or deep learning, this tutorial will be a great starting point.

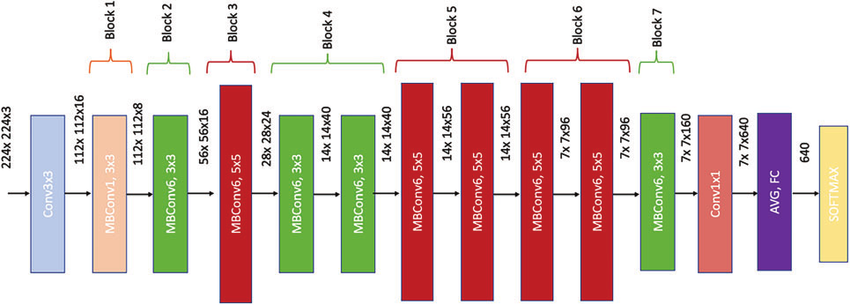

Using PyTorch, a popular deep learning framework, and EfficientNet, a state-of-the-art neural network architecture, we will train a model to analyze the game screen and make decisions based on what it sees. We will start by getting the necessary data, then processing it and finally training the model. By the end of this tutorial, you will have a better understanding of deep learning and how to train your own AI model.

Workflow

There are six primary stages to establishing an AI model:

Data Acquisition: Gathering the necessary data.

Data Preprocessing: Preparing the data for analysis.

Model Design: Constructing the architecture of the AI model.

Model Training: Teaching the model on the prepared data.

Model Evaluation: Assessing the model's performance.

Model Inference: Applying the trained model to make predictions or decisions.

Setting Up Project

Install Anaconda: Download and install the Anaconda distribution from the official website for your operating system.

Create a new project folder. Let’s name it “dino”. Open VS Code in this folder and open a terminal.

Create a new conda environment: Open Anaconda Prompt or your terminal and create a new conda environment by running the following command:

conda create --name myenv python=3.10

This will create a new environment named myenv with Python 3.10 installed.

Activate the environment: Once the environment is created, activate it using the following command:

conda activate myenv

Install PyTorch: Install the PyTorch library with CUDA support (for GPU acceleration) using the following command:

conda install pytorch torchvision torchaudio cudatoolkit=11.1 -c pytorch -c conda-forge

This command installs PyTorch, TorchVision, and TorchAudio with the CUDA toolkit version 11.1. You can change the version of the CUDA toolkit as per your requirement.

Test the installation: To verify the PyTorch installation, run the following command to start a Python interpreter in your conda environment:

python

Then, import the PyTorch library and print its version as follows:

import torch

print(torch.__version__)

This should print the version number of PyTorch installed in your environment.

Data Acquisition

We will get our data by taking snapshots of the game screen while a human player is playing the game. captures.py handles this.

import cv2
from PIL import ImageGrab
import numpy as np
import keyboard
import os
from datetime import datetime

current_key = ""
buffer = []

# check if folder named 'captures' exists. If not, create it.
if not os.path.exists("captures"):
    os.mkdir("captures")


def keyboardCallBack(key: keyboard.KeyboardEvent):
    '''
    This function is called when a keyboard event occurs. It keeps a sorted buffer of the keys currently held down.

    ### Arguments :
    `key (KeyboardEvent)`

    ### Returns :
    `None`

    ### Example :
    `keyboardCallBack(key)`
    '''
    global current_key

    if key.event_type == "down" and key.name not in buffer:
        buffer.append(key.name)
    # guard the removal: an "up" event can arrive for a key that was never recorded
    if key.event_type == "up" and key.name in buffer:
        buffer.remove(key.name)

    buffer.sort()  # Arrange the keys pressed in an ascending order
    current_key = " ".join(buffer)


keyboard.hook(callback=keyboardCallBack)

i = 0
while (not keyboard.is_pressed("esc")):
    # Capture image and save to the 'captures' folder with time and date along with the key being pressed
    # bbox is the game region on the author's screen; adjust it to match yours
    image = cv2.cvtColor(np.array(ImageGrab.grab(
        bbox=(620, 220, 1280, 360))), cv2.COLOR_RGB2BGR)
    timestamp = str(datetime.now()).replace("-", "_").replace(":", "_").replace(" ", "_")

    # if key pressed embed the key pressed in the file name
    if len(buffer) != 0:
        cv2.imwrite("captures/" + timestamp + " " + current_key + ".png", image)
    # if no key pressed embed 'n' in the file name
    else:
        cv2.imwrite("captures/" + timestamp + " n" + ".png", image)

    i = i + 1

This script captures screenshots using Python libraries PIL and OpenCV, saving them as PNG files in a specified directory. The screenshots are obtained using the ImageGrab module, with the region specified by the bbox argument. Keyboard events are also captured using the keyboard library, appending pressed keys to a buffer and removing them upon release, which is then saved as a string in current_key.
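To make the buffer logic concrete, here is a minimal sketch that replays a few simulated key events through the same down/up rules, without needing the keyboard library. The KeyEvent class and the apply_event helper are hypothetical stand-ins introduced for illustration; only the event_type and name fields mirror what the script reads.

```python
from dataclasses import dataclass


@dataclass
class KeyEvent:
    """Hypothetical stand-in for keyboard.KeyboardEvent (only the fields used here)."""
    event_type: str  # "down" or "up"
    name: str        # key name, e.g. "space"


def apply_event(buffer, event):
    """Update the list of held keys and return the label string for the current state."""
    if event.event_type == "down" and event.name not in buffer:
        buffer.append(event.name)
    if event.event_type == "up" and event.name in buffer:
        buffer.remove(event.name)
    buffer.sort()  # the same set of held keys always produces the same label
    return " ".join(buffer)


held = []
states = [
    apply_event(held, KeyEvent("down", "space")),  # press space
    apply_event(held, KeyEvent("down", "space")),  # auto-repeat while held: no duplicate
    apply_event(held, KeyEvent("up", "space")),    # release space
]
print(states)  # ['space', 'space', '']
```

An empty string at the end means no key is held, which is the case the capture loop labels as "n".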

Each screenshot is saved with a filename containing the capture timestamp and the current_key state. If no keys are pressed during capture, "n" is included in the filename instead of a key name.
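The naming scheme can be sketched in isolation. The helper name capture_name below is an assumption introduced for illustration; it applies the same timestamp replacements as the script and falls back to "n" when no key is held.

```python
from datetime import datetime


def capture_name(now, current_key):
    """Build '<timestamp> <label>.png' the way the capture loop does (hypothetical helper)."""
    stamp = str(now).replace("-", "_").replace(":", "_").replace(" ", "_")
    label = current_key if current_key else "n"
    return stamp + " " + label + ".png"


moment = datetime(2023, 5, 1, 14, 30, 15, 250000)  # arbitrary example time
name_with_key = capture_name(moment, "space")
name_without_key = capture_name(moment, "")
print(name_with_key)     # 2023_05_01_14_30_15.250000 space.png
print(name_without_key)  # 2023_05_01_14_30_15.250000 n.png
```

Because the label is always the text after the last space, it can be recovered later from the filename alone.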

This code serves as a foundation for creating an image dataset for machine learning tasks like object recognition or image classification. By capturing and labeling images with corresponding key presses, a dataset can be built to train a model in recognizing images and predicting key presses.

Run this Python file and start playing the game. Play for at least 20 different runs to get a good dataset.

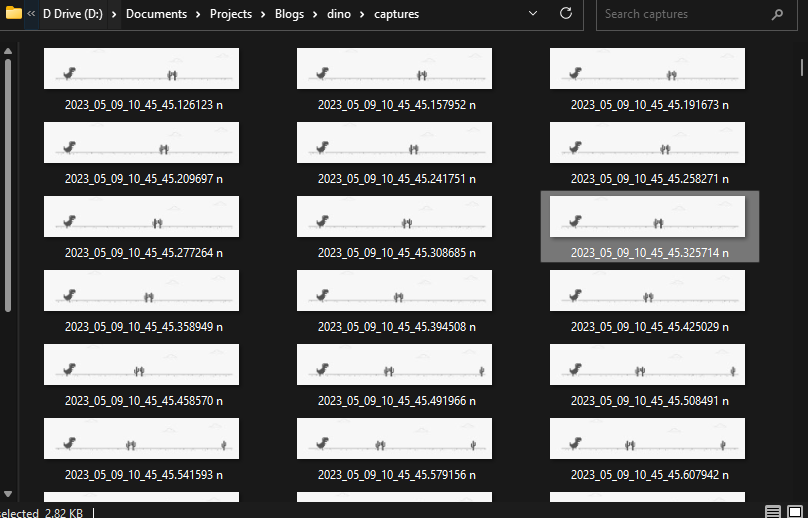

This is how the “captures” folder should look once all the images are captured. You can always run the script again and add more training data.

Data Preprocessing

Next we need a script to process the images that we have captured and turn them into a dataset that our model can understand. Create a new process.py file.

import os
import csv

labels = []
dir = 'captures' # directory to get the captured images from

# get the labels for each image in the directory
for f in os.listdir(dir):
    key = f.rsplit('.', 1)[0].rsplit(" ", 1)[1]
    if key == "n":
        labels.append({'file_name': f, 'class': 0})
    elif key == "space":
        labels.append({'file_name': f, 'class': 1})

field_names = ['file_name', 'class']

# write the labels to a csv file
# newline='' stops the csv module from writing blank rows on Windows
with open('labels_dino.csv', 'w', newline='') as csvfile:
    writer = csv.DictWriter(csvfile, fieldnames=field_names)
    writer.writeheader()
    writer.writerows(labels)

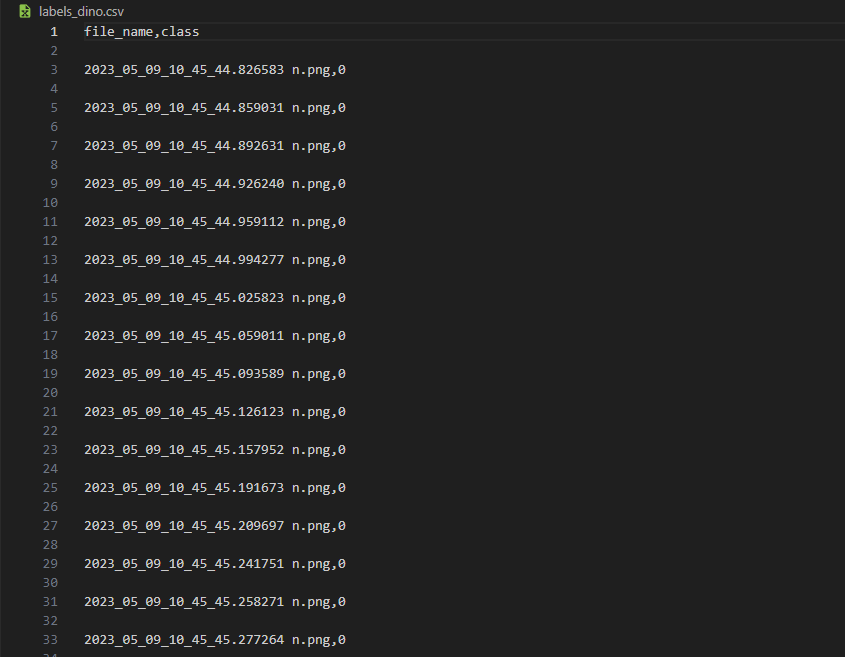

In this code excerpt, we're generating labels for images stored in a directory and then saving them to a CSV file.

Initially, we specify a directory named "dir" containing the captured images. Subsequently, we loop through each file in the directory using the os.listdir() function.

For each file, we extract the class label from its filename using string manipulation techniques. If the filename ends with "n", we assign the label 0; otherwise, if it ends with "space", we assign the label 1. Files whose name ends with any other key are skipped.
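The string manipulation can be tried on its own. In this sketch the function name label_from_filename and the sample file names are hypothetical; the two rsplit calls are the same ones the script uses.

```python
KEY_TO_CLASS = {"n": 0, "space": 1}  # same mapping as the labelling script


def label_from_filename(file_name):
    """Return the class for a capture file name, or None if the key is not one we train on."""
    stem = file_name.rsplit(".", 1)[0]  # drop the ".png" extension
    key = stem.rsplit(" ", 1)[1]        # text after the last space is the key label
    return KEY_TO_CLASS.get(key)


# Hypothetical file names in the capture script's format
print(label_from_filename("2023_05_01_14_30_15.250000 n.png"))      # 0
print(label_from_filename("2023_05_01_14_30_15.250000 space.png"))  # 1
print(label_from_filename("2023_05_01_14_30_15.250000 down.png"))   # None
```

Splitting from the right matters here: the timestamp itself contains a dot, so splitting from the left would cut the name in the wrong place.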

Next, we gather the labels into a list of dictionaries, where each dictionary holds the filename and its corresponding class label.

Finally, we utilize the csv module to write these labels to a CSV file named "labels_dino.csv". We define the field names for the CSV file and employ the DictWriter method to perform the writing process. Initially, we write the header row containing the field names, followed by using the writerows method to write the labels for each image in the directory to the CSV file.

The resulting "labels_dino.csv" file should resemble the following structure.
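As a rough illustration of that structure, the sketch below writes two rows to an in-memory buffer with the same DictWriter calls. The file names are made up for the example; real rows come from your own captures folder.

```python
import csv
import io

rows = [  # hypothetical rows; real ones come from the captures folder
    {"file_name": "2023_05_01_14_30_15.250000 n.png", "class": 0},
    {"file_name": "2023_05_01_14_30_15.310000 space.png", "class": 1},
]

buffer = io.StringIO()  # stands in for the labels_dino.csv file
writer = csv.DictWriter(buffer, fieldnames=["file_name", "class"])
writer.writeheader()
writer.writerows(rows)

csv_lines = buffer.getvalue().splitlines()
for line in csv_lines:
    print(line)
# file_name,class
# 2023_05_01_14_30_15.250000 n.png,0
# 2023_05_01_14_30_15.310000 space.png,1
```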

Model Design

To create our model, we first need a custom PyTorch Dataset. We will call this DinoDataset. Start by creating a new notebook, train.ipynb.

Let's import all dependencies:

from torch.utils.data import Dataset, DataLoader
import cv2
from PIL import Image
import pandas as pd
import torch
import os
from torchvision.transforms import CenterCrop, Resize, Compose, ToTensor, Normalize
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split
import torchvision.models
import torch.optim as optim
from tqdm import tqdm
import gc
import numpy as np

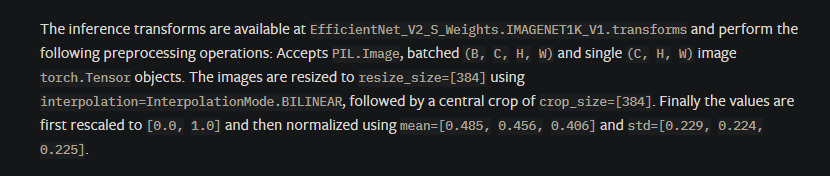

Now let’s create an image transformation pipeline that is required for EfficientNet v2

transformer = Compose([
    Resize((480,480)),
    CenterCrop(480),
    Normalize(mean =[0.485, 0.456, 0.406], std =[0.229, 0.224, 0.225] )
])

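The normalization mean and standard deviation are the ImageNet values given in the PyTorch documentation of EfficientNet v2. The 480-pixel size is what this tutorial uses for both training and inference; check the documentation for the input size recommended for the model variant you choose.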
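To see what Normalize does to a single pixel, here is a small worked sketch in plain Python. The function name normalize_pixel is an assumption for illustration; it applies (value - mean) / std per channel, which is the operation Normalize performs on each channel of the image tensor.

```python
MEAN = [0.485, 0.456, 0.406]  # per-channel means used in the pipeline
STD = [0.229, 0.224, 0.225]   # per-channel standard deviations


def normalize_pixel(rgb):
    """Apply (value - mean) / std to one RGB pixel whose values are already in [0, 1]."""
    return [(v - m) / s for v, m, s in zip(rgb, MEAN, STD)]


white = normalize_pixel([1.0, 1.0, 1.0])  # a fully white pixel after ToTensor
black = normalize_pixel([0.0, 0.0, 0.0])  # a fully black pixel after ToTensor
print([round(v, 3) for v in white])
print([round(v, 3) for v in black])
```

The inputs must already be scaled to the 0 to 1 range, which is why the dataset applies ToTensor before this pipeline runs.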

Now let’s create our DinoDataset

class DinoDataset(Dataset):
    def __init__(self, dataframe, root_dir, transform = None):
        """
        Args:
            dataframe (pandas.DataFrame): File names (column 0) and class labels (column 1).
            root_dir (string): Directory with all the images.
            transform (callable, optional): Optional transform to be applied
                on a sample.
        """
        self.key_frame = dataframe
        self.root_dir = root_dir
        self.transform = transform

    def __len__(self):
        return len(self.key_frame)

    def __getitem__(self, idx):
        if torch.is_tensor(idx):
            idx = idx.tolist()  # tensors provide tolist(), not to_list()

        img_name = os.path.join(self.root_dir, self.key_frame.iloc[idx,0])
        image = Image.open(img_name)
        image = ToTensor()(image)
        label = torch.tensor(self.key_frame.iloc[idx, 1])

        if self.transform:
            image = self.transform(image)

        return image, label

This custom dataset class, called DinoDataset, is built upon the PyTorch Dataset class. It takes three arguments, the last of which is optional:

"dataframe": a pandas dataframe containing filenames and labels for each image.

"root_dir": the root directory where the images are stored.

"transform": an optional transformation to apply to the images.

The __len__ method returns the dataset's length, which is the total number of images.

The __getitem__ method loads images and their corresponding labels. It takes an index "idx" as input and returns the image along with its label. The image is loaded using PIL.Image.open, converted into a PyTorch tensor using ToTensor, and the label is fetched from the dataframe using iloc. If a transformation is specified, it's applied to the image before returning it.

Model Training

key_frame = pd.read_csv("labels_dino.csv") #importing the csv file written by process.py
train,test = train_test_split(key_frame, test_size = 0.2) #splitting the data into train and test sets
train = pd.DataFrame(train)
test = pd.DataFrame(test)

batch_size = 4
trainset = DinoDataset(root_dir = "captures", dataframe = train, transform = transformer)
trainloader = torch.utils.data.DataLoader(trainset, batch_size = batch_size)

testset = DinoDataset(root_dir = "captures", dataframe = test, transform = transformer)
testloader = torch.utils.data.DataLoader(testset, batch_size = batch_size)

In this code snippet, the dataset is split into training and testing sets using the train_test_split function from scikit-learn, with a 20% test size. The resulting splits are stored in the train and test variables as pandas DataFrames.

Then, a batch size of 4 is defined, and the DinoDataset class is employed to create PyTorch DataLoader objects for both the training and testing sets. The root_dir argument is configured to "captures," which is the directory containing the captured images, while the transform argument is set to "transformer," which represents the data preprocessing pipeline defined earlier. The resulting DataLoader objects are trainloader and testloader, serving as conduits to provide data to the neural network during training and testing stages, respectively.

Higher batch_size values can be utilized if access to a high-end GPU is available, but for now, a smaller batch size is chosen.
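A quick back-of-the-envelope sketch of how the split and the batch size translate into batches per epoch. The image count of 1000 is a made-up figure, and rounding the test share up is my assumption about how train_test_split treats a fractional test_size.

```python
import math

n_images = 1000    # hypothetical dataset size
test_percent = 20  # corresponds to test_size = 0.2
batch_size = 4

n_test = math.ceil(n_images * test_percent / 100)  # assumed rounding for the test share
n_train = n_images - n_test
# DataLoader keeps the last partial batch by default, hence the ceiling
train_batches = math.ceil(n_train / batch_size)
test_batches = math.ceil(n_test / batch_size)

print(n_train, n_test, train_batches, test_batches)  # 800 200 200 50
```

Doubling batch_size halves the number of optimizer steps per epoch, at the cost of more GPU memory per step.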

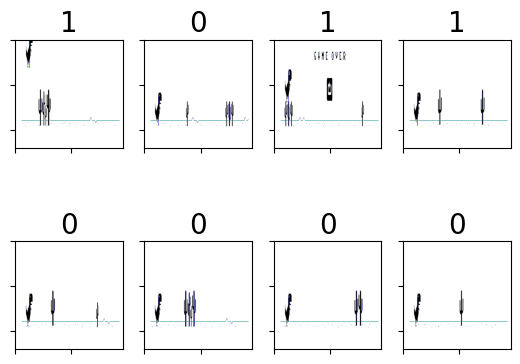

Let's examine the images within one of the batches in the DataLoader.

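dataiter = iter(trainloader)
images, labels = next(dataiter)

# the batch is normalized, so undo that before plotting
mean = torch.tensor([0.485, 0.456, 0.406])
std = torch.tensor([0.229, 0.224, 0.225])

for i in range(len(images)):
    ax = plt.subplot(2, 4, i + 1)
    image = (images[i].permute(1,2,0).cpu() * std + mean).clamp(0, 1) # back to the [0, 1] range imshow expects
    ax.set_title(labels[i].item(), fontsize=20) # Setting the title of the subplot
    ax.set_xticklabels([]) # Removing the x-axis labels
    ax.set_yticklabels([]) # Removing the y-axis labels
    plt.imshow(image) # Plotting the image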

The number on top of each image shows the key that was pressed when that image was taken: 1 is for “space” and 0 is for no key pressed.

Creating the Model

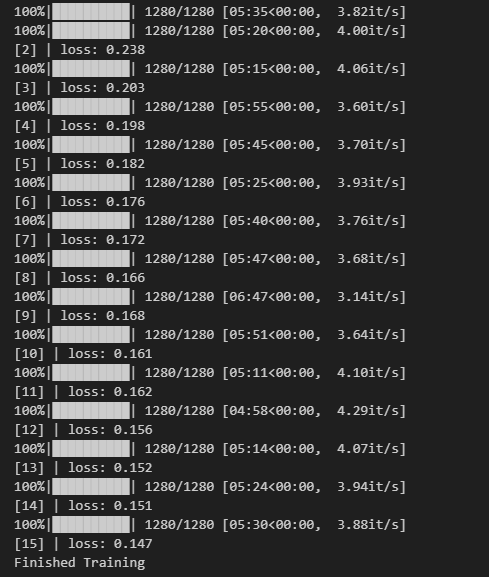

epochs = 15 # number of training passes over the mini batches
loss_container = [] # container to store the loss values after each epoch

for epoch in range(epochs): # loop over the dataset multiple times
    running_loss = 0.0
    for data in tqdm(trainloader, position=0, leave=True):
        # get the inputs; data is a list of [inputs, labels]
        inputs, labels = data
        inputs = inputs.to(device)
        labels = labels.to(device)

        # zero the parameter gradients
        optimizer.zero_grad()

        # forward + backward + optimize
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()

    loss_container.append(running_loss)
    print(f'[{epoch + 1}] | loss: {running_loss / len(trainloader):.3f}')
    running_loss = 0.0

print('Finished Training')

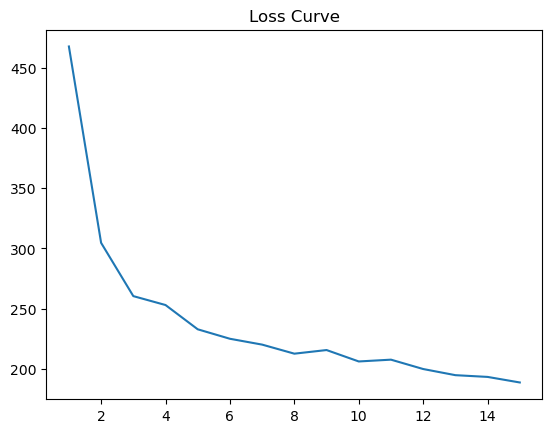

# plot the loss curve
plt.plot(np.linspace(1, epochs, epochs).astype(int), loss_container)

# clean up the gpu memory
gc.collect()
torch.cuda.empty_cache()

This is the training loop for the model. The for loop iterates over a fixed number of epochs (15 in this case), during which the model is trained on the dataset.

The inner for loop uses a DataLoader object to load the dataset in batches. In each iteration, the inputs and labels are loaded and sent to the device (GPU if available). The optimizer’s gradient is then zeroed, and the forward pass is performed on the inputs. The output of the model is then compared to the labels using the Cross Entropy Loss criterion. The loss is backpropagated through the model, and the optimizer’s step method is called to update the model’s weights.
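To show what the criterion computes for a single frame, here is a plain-Python sketch of cross-entropy on raw model scores. The function name and the example scores are hypothetical; PyTorch's criterion does the same calculation per sample and, by default, averages the result over the batch.

```python
import math


def cross_entropy(logits, label):
    """Cross-entropy for one sample: -log(softmax(logits)[label])."""
    peak = max(logits)
    shifted = [z - peak for z in logits]  # subtract the max for numerical stability
    log_sum = math.log(sum(math.exp(z) for z in shifted))
    return log_sum - shifted[label]


scores = [0.5, 3.0]  # hypothetical raw outputs: [no key, space]
loss_if_space = cross_entropy(scores, label=1)   # truth agrees with the higher score
loss_if_no_key = cross_entropy(scores, label=0)  # truth disagrees with the higher score
print(round(loss_if_space, 3), round(loss_if_no_key, 3))
```

The loss is small when the highest score belongs to the true class and grows as the model becomes confidently wrong, which is what drives the weight updates.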

The loss is accumulated over the epoch to get the total loss for that epoch, which is stored in loss_container. At the end of each epoch, the average loss per batch is printed. Evaluation on the test set is done separately, after training.

Note that tqdm is used to display a progress bar for each batch of data in the training loop.

This is what the loss curve looks like. If the loss is still decreasing, the training loop can be run for more epochs.

We can also save our model using the following code:

PATH = 'efficientnet_s.pth'

torch.save(model.state_dict(), PATH)

Model Evaluation

Let’s load a new EfficientNet Model that uses the weights we saved in the last step.

saved_model = torchvision.models.efficientnet_v2_s()
saved_model.classifier = torch.nn.Linear(in_features = 1280, out_features = 2)
saved_model.load_state_dict(torch.load(PATH))
saved_model = saved_model.to(device)
saved_model.eval() # switch to evaluation mode

correct = 0
total = 0

with torch.no_grad():
    for data in tqdm(testloader):
        images,labels = data
        images = images.to(device)
        labels = labels.to(device)

        outputs = saved_model(images)
        predicted = torch.softmax(outputs,dim = 1).argmax(dim = 1)

        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print(f'\n Accuracy of the network on the test images: {100 * correct // total} %')

This code snippet evaluates the performance of a trained model on the test set.

First, the correct and total variables are initialized to 0. Then, a loop over the test set begins, using the testloader to load a batch of images and labels at each iteration.

Within the loop, the images and labels are moved to the same device as the model (the GPU, if available). The trained model is then used to make predictions on the input images.

The torch.softmax() function is employed to convert the model outputs into probabilities, followed by the argmax() function to determine the predicted class for each image. The count of correctly classified images is computed by comparing the predicted and true labels.
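A small plain-Python sketch of this step, using hypothetical scores for one frame. It also shows that softmax keeps the ordering of the scores, so taking argmax of the raw outputs picks the same class; softmax is only needed when you want the probabilities themselves.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])


scores = [-1.2, 0.7]  # hypothetical model output for one frame: [no key, space]
probs = softmax(scores)
print(argmax(scores), argmax(probs))  # 1 1
```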

The total variable is incremented by the size of the current batch, and the correct variable is incremented by the number of correctly classified images in the batch.

Upon completion of the loop, the percentage accuracy of the model on the test set is printed to the console. In this instance, the accuracy is reported to be 91%, which is good enough for playing the game. Further improvement is possible through hyperparameter tuning, particularly of the optimizer settings. Future blogs will delve deeper into hyperparameter tuning using tools like Weights & Biases.

Playing The Game

Create a new file dino.py. Run this file, switch to the dino game screen, and watch your AI model play the game.

import torch
from torchvision.models.efficientnet import efficientnet_v2_s
import keyboard
from PIL import Image, ImageGrab
import numpy as np
from torchvision.transforms import Compose, Resize, CenterCrop, ToTensor, Normalize
from tqdm import tqdm

device = "cuda" if torch.cuda.is_available() else "cpu"
model = efficientnet_v2_s()
model.classifier = torch.nn.Linear(in_features = 1280, out_features = 2)
# the checkpoint is expected in a 'models' folder; move efficientnet_s.pth there or adjust the path
model.load_state_dict(torch.load("models/efficientnet_s.pth", map_location = device))
model.to(device)
model.eval()

transformer = Compose([
    Resize([480,480]),
    CenterCrop(480),
    Normalize(mean =[0.485, 0.456, 0.406], std =[0.229, 0.224, 0.225])
])

def generator():
    while(not keyboard.is_pressed("esc")):
        yield

for _ in tqdm(generator()):
    image = ImageGrab.grab(bbox = (620,220,1280,360))
    image = ToTensor()(image)
    image = image.to(device)
    image = transformer(image)
    with torch.no_grad(): # gradients are not needed for inference
        outputs = model(image[None , ...])
    _,preds = torch.max(outputs.data, 1)
    if preds.item() == 1:
        keyboard.press_and_release("space")

The code imports the necessary libraries, including the EfficientNetV2-S model from torchvision, keyboard, PIL, numpy, and tqdm.

It builds an EfficientNetV2-S model, replaces its classifier with a two-class layer, and loads the weights from the saved checkpoint. The model is moved to the GPU if one is available and set to evaluation mode.

The transformer variable applies the same preprocessing steps used during training to the captured screen images: resizing, center cropping, and normalizing them.

The generator function keeps yielding until the "esc" key is pressed, which gives tqdm an open-ended iterable to count iterations over.

A loop continuously captures screen images, converts them to PyTorch tensors, preprocesses them, and feeds them into the model to get a score for each class. If the model predicts a "jump" action, it simulates a spacebar press.

This loop runs until the "esc" key is pressed, with progress tracked using tqdm. Your model should now be able to play the dino game, at least until birds appear.
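The generator pattern used for the play loop can be tried without a keyboard or a screen. In this sketch, run_until and the frame counter are hypothetical stand-ins: the stop condition replaces keyboard.is_pressed("esc"), and the counter replaces the capture-and-predict work.

```python
def run_until(should_stop):
    """Yield repeatedly until should_stop() returns True (mirrors generator() in dino.py)."""
    while not should_stop():
        yield


frames_seen = 0
# Hypothetical stop condition standing in for keyboard.is_pressed("esc")
for _ in run_until(lambda: frames_seen >= 3):
    frames_seen += 1  # the real loop would grab a frame and run the model here

print(frames_seen)  # 3
```

Wrapping the loop in a generator like this lets tqdm report iterations per second even though the total number of iterations is unknown in advance.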